Please note that this post, first published over a year ago, may now be out of date.

How secure is your EKS cluster? You may think: “I’m using a Kubernetes cluster managed by AWS, it must be secure, right?”

Not necessarily.

It’s true that AWS takes away the pain of managing and securing core Kubernetes components like the control plane and master nodes.

However, there are still lots of other Kubernetes components that you need to configure and secure when using EKS.

But despair not: there are tools that can help you assess the security of your Kubernetes cluster and automatically flag vulnerabilities. In this blog post I'm going to show you one of these tools, kube-bench. I'll cover how to run security scans in your EKS cluster with it and how to assess the results.

If you want to move straight into the practical part and start using kube-bench, feel free to jump to the kube-bench section.

AWS Shared Responsibility Model and EKS

Before diving into kube-bench, it is worth reviewing the AWS Shared Responsibility Model and how it applies to EKS.

The AWS Shared Responsibility Model is a core concept in AWS. In a nutshell, AWS is responsible for security of the cloud. This means protecting the infrastructure that runs all the services offered in AWS (regions and availability zones, hardware, networking, storage, etc.). Customers (you!), on the other hand, are responsible for security in the cloud. This means updating and patching the operating system on EC2 instances, configuring security groups, etc. The scope of your responsibility varies a lot with the AWS service you're using. For example, a self-managed service like EC2 requires you to perform security configuration at the operating system layer. A fully managed service like S3 removes these tasks but still leaves you responsible for managing your data and its permissions.

EKS is a managed service, and AWS takes care of several core components of Kubernetes. This means you don't need to worry about securing the control plane and components like the master nodes, the etcd database, etc. This is a good thing, and it is why lots of customers decide to go for EKS.

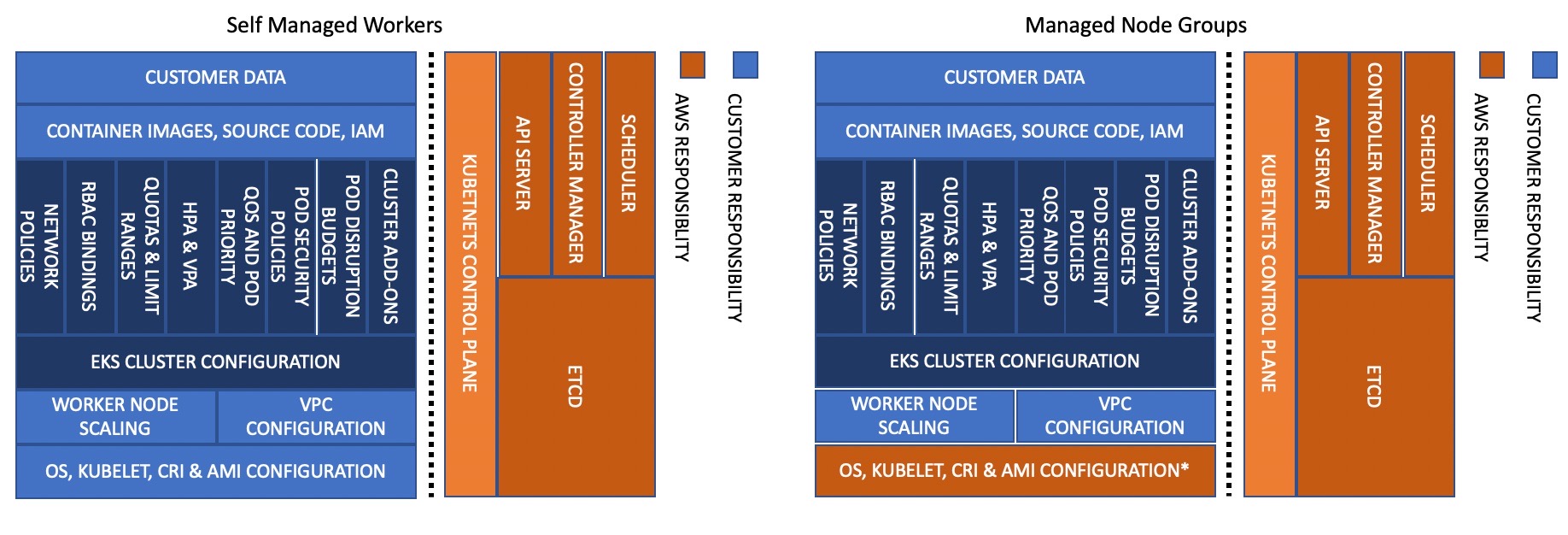

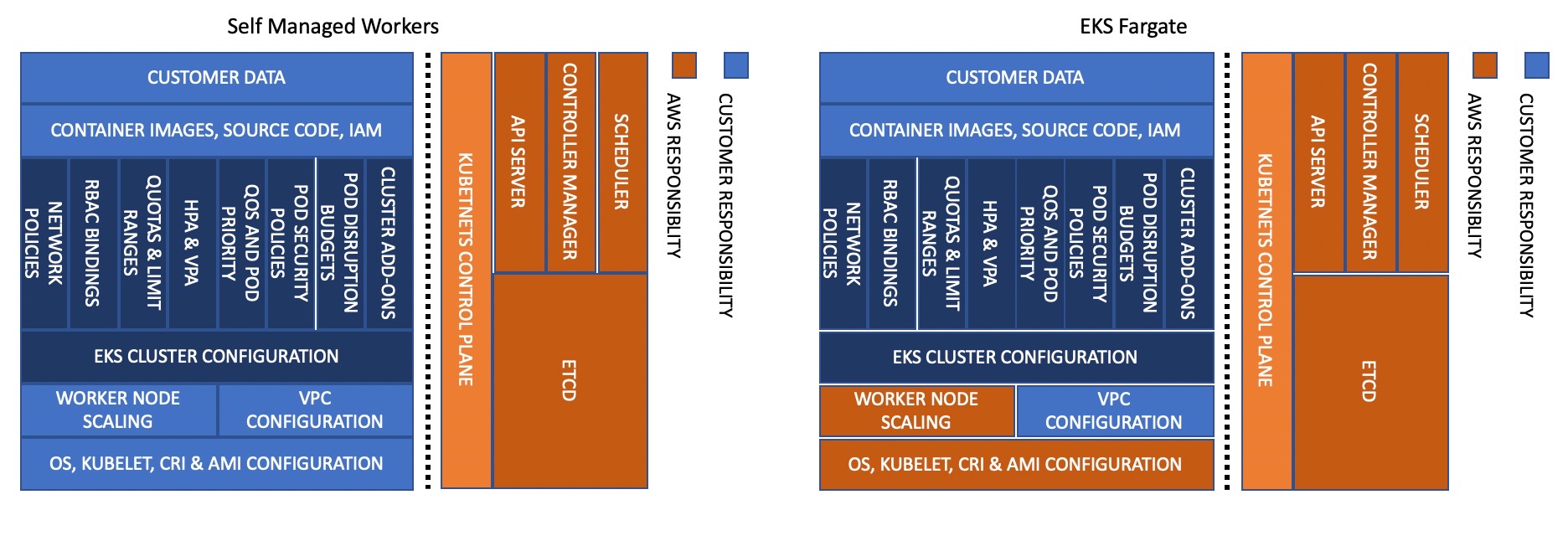

EKS comes in three flavours: self-managed workers, managed node groups, and EKS Fargate. The flavour of EKS you choose is going to affect the level of responsibility you have for your EKS cluster. For example, when you choose self-managed workers you are responsible for patching your worker nodes’ operating system whereas if you choose EKS Fargate you don’t need to worry about this at all.

The diagrams below show which Kubernetes components you and AWS need to secure according to the flavour of EKS you’re using:

Images from AWS documentation

Understanding these boundaries is important for assessing the results of security scans executed against your EKS cluster. For example, security checks against worker nodes are crucial for self-managed and managed nodes whereas they are completely out of scope for EKS Fargate.

kube-bench

We can now dive into kube-bench, an open source Go application that checks whether Kubernetes is deployed according to security best practices. It implements the CIS Kubernetes Benchmarks, documents developed by the Center for Internet Security for running Kubernetes securely. kube-bench can be executed against a self-managed Kubernetes cluster as well as Kubernetes clusters managed by popular cloud providers like AWS, Azure, Google Cloud, etc.

I'm going to run kube-bench against an EKS cluster, so these security checks reference the CIS Amazon EKS Benchmark. This is a benchmark customized for AWS. It includes checks specific to EKS (e.g. relations with other AWS services like ECR, IAM, KMS, etc.). It also excludes checks that are not relevant to EKS because AWS manages those components for you (e.g. control plane components and configurations). You can download the latest CIS Amazon EKS Benchmark from the CIS website and use it to get more information and assess any failures or warnings reported by kube-bench.

You can run kube-bench from inside a container (without even installing it), as a job in your EKS cluster, or by installing the rpm/deb package directly on your worker nodes. The easiest way to get started is to run it as a job in the EKS cluster. kube-bench provides a ready-to-use manifest file, job-eks.yaml, which I reproduce here in a slightly modified version:

---
apiVersion: batch/v1
kind: Job
metadata:
  name: kube-bench
spec:
  template:
    spec:
      hostPID: true
      containers:
        - name: kube-bench
          image: aquasec/kube-bench:latest
          command: ["kube-bench", "--benchmark", "eks-1.0"]
          volumeMounts:
            - name: var-lib-kubelet
              mountPath: /var/lib/kubelet
              readOnly: true
            - name: etc-systemd
              mountPath: /etc/systemd
              readOnly: true
            - name: etc-kubernetes
              mountPath: /etc/kubernetes
              readOnly: true
      restartPolicy: Never
      volumes:
        - name: var-lib-kubelet
          hostPath:
            path: "/var/lib/kubelet"
        - name: etc-systemd
          hostPath:
            path: "/etc/systemd"
        - name: etc-kubernetes
          hostPath:
            path: "/etc/kubernetes"

The job uses the latest container image from Aqua Security, another reputable AWS partner. However, you can also build your own kube-bench image and push it to ECR in your AWS account. The job manifest file in the GitHub repo has comments explaining how to reference an image in your ECR repository. The command executed in the job is:

command: ["kube-bench", "--benchmark", "eks-1.0"]

which runs all the security checks in the CIS Amazon EKS Benchmark (eks-1.0). Note that you can also run a subset of the checks, for example only those related to worker nodes, by modifying the command to:

command: ["kube-bench", "node", "--benchmark", "eks-1.0"]

Run the job in your EKS cluster with:

kubectl apply -f job-eks.yaml

and check that the job ends successfully with the status Completed:

$ kubectl get po -A
NAMESPACE   NAME               READY   STATUS      RESTARTS   AGE
default     kube-bench-kwc2n   0/1     Completed   0          4s
...

The kube-bench report is generated in the logs of the completed job. You can output the logs to a local file as follows (replace kube-bench-kwc2n with your pod name):

kubectl logs kube-bench-kwc2n > assessment_1.log

The report looks similar to this (section 3 only):

[INFO] 3 Worker Node Security Configuration
[INFO] 3.1 Worker Node Configuration Files
[PASS] 3.1.1 Ensure that the proxy kubeconfig file permissions are set to 644 or more restrictive (Scored)
[PASS] 3.1.2 Ensure that the proxy kubeconfig file ownership is set to root:root (Scored)
[PASS] 3.1.3 Ensure that the kubelet configuration file has permissions set to 644 or more restrictive (Scored)
[PASS] 3.1.4 Ensure that the kubelet configuration file ownership is set to root:root (Scored)
[INFO] 3.2 Kubelet
[PASS] 3.2.1 Ensure that the --anonymous-auth argument is set to false (Scored)
[PASS] 3.2.2 Ensure that the --authorization-mode argument is not set to AlwaysAllow (Scored)
[PASS] 3.2.3 Ensure that the --client-ca-file argument is set as appropriate (Scored)
[PASS] 3.2.4 Ensure that the --read-only-port argument is set to 0 (Scored)
[PASS] 3.2.5 Ensure that the --streaming-connection-idle-timeout argument is not set to 0 (Scored)
[PASS] 3.2.6 Ensure that the --protect-kernel-defaults argument is set to true (Scored)
[PASS] 3.2.7 Ensure that the --make-iptables-util-chains argument is set to true (Scored)
[PASS] 3.2.8 Ensure that the --hostname-override argument is not set (Scored)
[PASS] 3.2.9 Ensure that the --event-qps argument is set to 0 or a level which ensures appropriate event capture (Scored)
[PASS] 3.2.10 Ensure that the --rotate-certificates argument is not set to false (Scored)
[PASS] 3.2.11 Ensure that the RotateKubeletServerCertificate argument is set to true (Scored)

== Remediations node ==
...

== Summary node ==
15 checks PASS
0 checks FAIL
0 checks WARN
0 checks INFO

If you have failed checks or checks with warnings, it is worth referring to the corresponding section in the CIS Amazon EKS Benchmark. That helps you understand what the check flagged and assess whether the issue is a security vulnerability, a false positive, or not relevant to your cluster.
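If the report is long, it can help to pull out just the checks that need attention before you open the benchmark. Here's a minimal Python sketch that does this. It assumes the plain-text report format shown above (lines such as "[FAIL] 3.1.1 Ensure ..."), which may differ between kube-bench versions. The function and script names are my own, not part of kube-bench.

```python
import re
import sys
from typing import NamedTuple

# Matches result lines such as "[FAIL] 3.1.1 Ensure that ... (Scored)".
# Section headings like "[INFO] 3.1 Worker Node Configuration Files" also match,
# always with status INFO, which is why the triage below only keeps FAIL and WARN.
RESULT_LINE = re.compile(r"^\[(PASS|FAIL|WARN|INFO)\]\s+(\d+(?:\.\d+)+)\s+(.*)$")


class CheckResult(NamedTuple):
    status: str
    check_id: str
    title: str


def parse_results(report: str) -> list[CheckResult]:
    """Extract (status, check id, title) from each result line of a kube-bench text report."""
    results = []
    for line in report.splitlines():
        match = RESULT_LINE.match(line.strip())
        if match:
            results.append(CheckResult(*match.groups()))
    return results


def checks_to_review(report: str) -> list[CheckResult]:
    """Keep only failed checks and warnings: the ones to look up in the CIS Amazon EKS Benchmark."""
    return [r for r in parse_results(report) if r.status in ("FAIL", "WARN")]


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical script name): python triage.py assessment_1.log
    with open(sys.argv[1]) as f:
        for result in checks_to_review(f.read()):
            print(f"{result.status} {result.check_id}: {result.title}")
```

Each printed check ID maps directly to a numbered recommendation in the benchmark document.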

The checks apply to the node that the job gets scheduled to, so if you have a mix of node configurations you’ll need to run a different job for each set of nodes that share a configuration (for example, use a nodeSelector in the pod template).
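As a sketch of the nodeSelector approach, the Python below builds one copy of the job manifest per node group and pins each copy to its node group with a nodeSelector. It assumes managed node groups, whose nodes EKS labels with eks.amazonaws.com/nodegroup. For self-managed workers you would use a label you apply yourself. The node group names are hypothetical. kubectl accepts JSON manifests as well as YAML, so the output can be applied directly.

```python
import copy
import json

HOST_PATHS = ["/var/lib/kubelet", "/etc/systemd", "/etc/kubernetes"]


def volume_name(path: str) -> str:
    # "/var/lib/kubelet" -> "var-lib-kubelet", matching the names in job-eks.yaml
    return path.strip("/").replace("/", "-")


# The job-eks.yaml manifest from this post, expressed as a Python dict.
BASE_JOB = {
    "apiVersion": "batch/v1",
    "kind": "Job",
    "metadata": {"name": "kube-bench"},
    "spec": {
        "template": {
            "spec": {
                "hostPID": True,
                "containers": [{
                    "name": "kube-bench",
                    "image": "aquasec/kube-bench:latest",
                    "command": ["kube-bench", "--benchmark", "eks-1.0"],
                    "volumeMounts": [
                        {"name": volume_name(p), "mountPath": p, "readOnly": True}
                        for p in HOST_PATHS
                    ],
                }],
                "restartPolicy": "Never",
                "volumes": [
                    {"name": volume_name(p), "hostPath": {"path": p}} for p in HOST_PATHS
                ],
            }
        }
    },
}

# Label that EKS applies to nodes in managed node groups (assumption: self-managed
# workers need a label of your own choosing instead).
NODEGROUP_LABEL = "eks.amazonaws.com/nodegroup"


def job_for_nodegroup(nodegroup: str) -> dict:
    """Return a copy of BASE_JOB that only schedules onto nodes of one node group."""
    job = copy.deepcopy(BASE_JOB)
    # Job names must be unique in the namespace, so suffix the node group name
    # (assumes the name is already a valid lowercase DNS label).
    job["metadata"]["name"] = f"kube-bench-{nodegroup}"
    job["spec"]["template"]["spec"]["nodeSelector"] = {NODEGROUP_LABEL: nodegroup}
    return job


if __name__ == "__main__":
    # "general" is a hypothetical node group name.
    print(json.dumps(job_for_nodegroup("general"), indent=2))
```

For example, redirect the output to job-eks-general.json and run kubectl apply -f job-eks-general.json. Giving each job its own name lets the jobs for different node groups exist side by side, and each produces its own report in its pod's logs.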

Once you are done, make sure to delete the job, which also deletes its pod. The completed job stays in the cluster, and re-applying the same manifest won't start a new kube-bench run until the old job is gone.

kubectl delete -f job-eks.yaml

Simulating security vulnerabilities

To check that kube-bench correctly reports security vulnerabilities, I'll intentionally introduce one into my EKS cluster. I SSH into a worker node, then change the permissions and ownership of the kubeconfig file:

sudo chmod 777 /var/lib/kubelet/kubeconfig

sudo chown ec2-user:ec2-user /var/lib/kubelet/kubeconfig

I re-run the job with kubectl apply -f job-eks.yaml and output the report to a new local file. This time the report shows failed checks for 3.1.1 (file permissions) and 3.1.2 (ownership) and suggests remediation steps:

[INFO] 3 Worker Node Security Configuration
[INFO] 3.1 Worker Node Configuration Files
[FAIL] 3.1.1 Ensure that the proxy kubeconfig file permissions are set to 644 or more restrictive (Scored)
[FAIL] 3.1.2 Ensure that the proxy kubeconfig file ownership is set to root:root (Scored)
[...]

== Remediations node ==
3.1.1 Run the below command (based on the file location on your system) on each worker node.
For example,
chmod 644 /var/lib/kubelet/kubeconfig

3.1.2 Run the below command (based on the file location on your system) on each worker node.
For example, chown root:root /var/lib/kubelet/kubeconfig

== Summary node ==
13 checks PASS
2 checks FAIL
0 checks WARN
0 checks INFO

Again, you can find more information about these checks and the remediation steps in the CIS Amazon EKS Benchmark.
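To make checks 3.1.1 and 3.1.2 concrete, here's a small Python sketch of what "644 or more restrictive" and "root:root" mean for a file. It illustrates the rule only; it is not how kube-bench implements the check. It assumes root's user and group IDs are both 0, as on standard Linux distributions.

```python
import os
import stat


def mode_is_644_or_stricter(mode: int) -> bool:
    """True if the permission bits grant nothing beyond rw-r--r-- (0o644)."""
    # Any bit outside 0o644 (group/other write, any execute bit, setuid, ...)
    # makes the file more permissive than 644.
    return (stat.S_IMODE(mode) & ~0o644) == 0


def check_kubeconfig(path: str) -> dict[str, bool]:
    """Mirror the intent of checks 3.1.1 (permissions) and 3.1.2 (ownership) for one file."""
    st = os.stat(path)
    return {
        "permissions_ok": mode_is_644_or_stricter(st.st_mode),
        # root:root, assuming root has UID 0 and GID 0
        "ownership_ok": st.st_uid == 0 and st.st_gid == 0,
    }


if __name__ == "__main__":
    # 0o777 (as set by the chmod above) adds write and execute bits for group/other.
    print(mode_is_644_or_stricter(0o777))  # False
    print(mode_is_644_or_stricter(0o600))  # True
```

This is why both 600 and 640 count as "more restrictive" than 644, while 664 or 755 do not.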

Ideally, you want to send results to your CI/CD system so that any potential security risk is flagged immediately. The summary at the end of the report is handy for this because it lists the total number of failed checks.
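As an example of that kind of CI/CD integration, here's a minimal Python sketch of a pipeline step. It reads a saved report (such as assessment_1.log) and exits with a non-zero status when any summary reports failed checks, optionally treating warnings as failures too. It relies only on the "N checks STATUS" summary lines shown above. The script name and the fail_on_warn option are my own choices.

```python
import re
import sys

# Matches summary lines such as "2 checks FAIL" at the end of a report section.
SUMMARY_LINE = re.compile(r"^\s*(\d+) checks (PASS|FAIL|WARN|INFO)\s*$", re.MULTILINE)


def summary_counts(report: str, status: str) -> list[int]:
    """Return the count reported for `status` by every "== Summary ... ==" block."""
    return [int(n) for n, s in SUMMARY_LINE.findall(report) if s == status]


def gate(report: str, fail_on_warn: bool = False) -> int:
    """Exit code for a CI step: 1 if any summary reports failures (or warnings, if asked)."""
    statuses = ["FAIL", "WARN"] if fail_on_warn else ["FAIL"]
    flagged = any(n > 0 for s in statuses for n in summary_counts(report, s))
    return 1 if flagged else 0


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical script name): python kube_bench_gate.py assessment_1.log
    with open(sys.argv[1]) as f:
        sys.exit(gate(f.read()))
```

The gate checks each summary for a non-zero count rather than adding the counts together. That way it gives the same answer however many summary blocks the report contains.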

You can also integrate kube-bench with AWS Security Hub so that any failed check or warning automatically shows up as a finding in the AWS console. If you're interested in this option, check out these instructions and the related job manifest job-eks-asff.yaml.

Wrap-up

I hope this post made you aware of your responsibilities in terms of security when running an EKS cluster. If you have an existing EKS cluster, give kube-bench a go and run a security scan; it’s super easy to get started. You may even be surprised/horrified/pleased when you see the results!

Do you need expert advice on Kubernetes? We are a Kubernetes Certified Service Provider and have a wealth of experience with Kubernetes, EKS, and containers. Book a Kubernetes review today.

This blog is written exclusively by The Scale Factory team. We do not accept external contributions.